breatHaptics: Enabling Granular Rendering of Breath Signals via Haptics using Shape-Changing Soft Interfaces

ACM Tangible and Embodied Interaction (TEI) 2024

Authors: Sunniva Liu, Jianzhe Gu, Dinesh K. Patel, Lining Yao

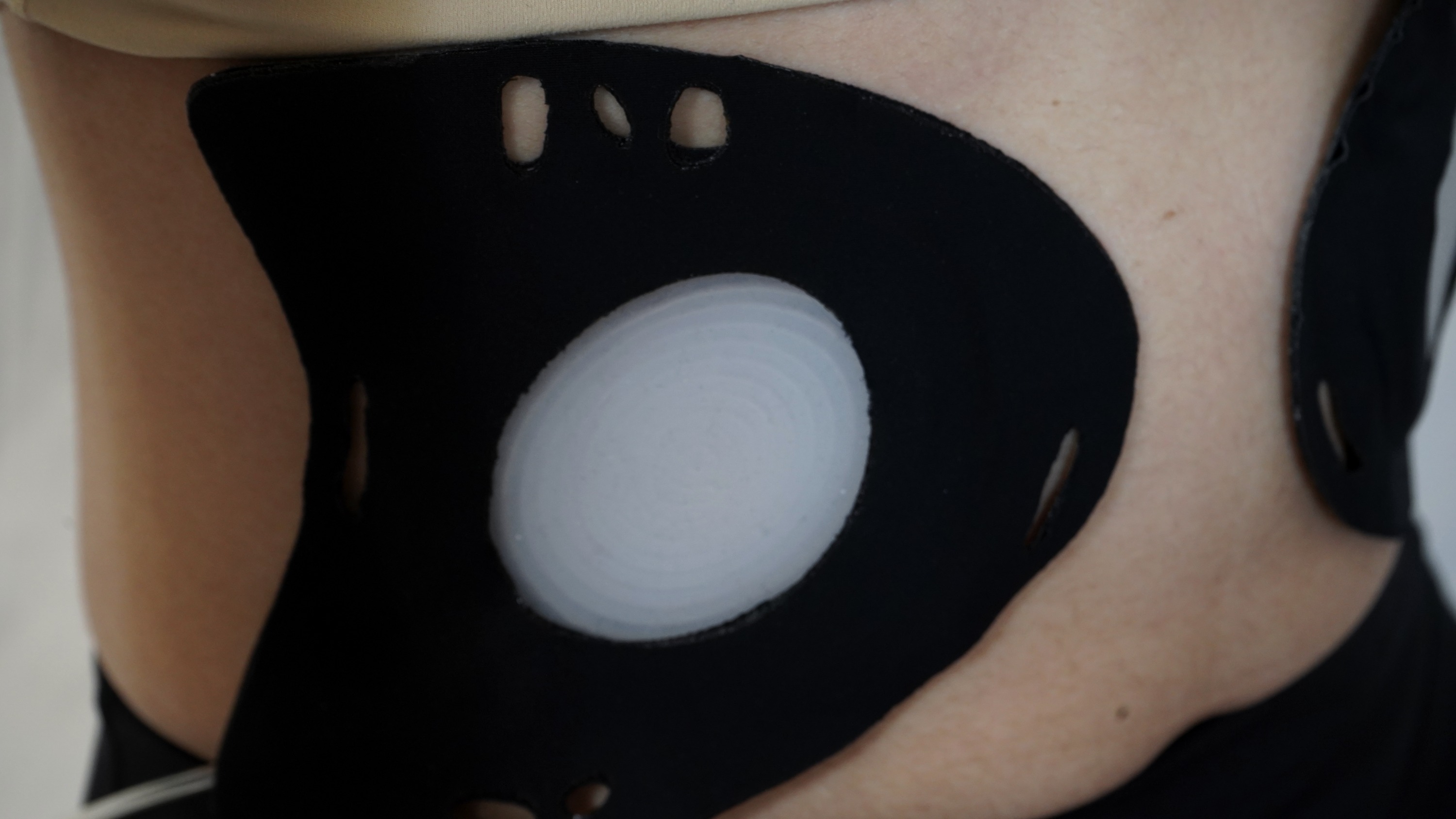

Feeling breath signals from the digital world is valuable in remote settings. Previous research has represented these signals visually or audibly, but recent advances in wearable technology now make it possible to simulate breath signals via haptics, an intimate and intuitive form of non-verbal interaction. Prior work relied on low-resolution methods of breath signal rendering and therefore offered only a limited understanding of the associated haptic perceptions. To address this gap, we introduce breatHaptics, a wearable that offers a high-resolution haptic representation of breath signals. Using extracted breath data, a mapping algorithm, and finely tuned soft actuated materials, we deliver a granular sensation of human breath. Through a perception study involving force discrimination testing and haptic experience evaluation, we demonstrate breatHaptics' ability to create a rich, nuanced tactile sensation of breath. Our work illustrates the promising role of breatHaptics as part of wearable technologies that offer well-being support.


#Haptic Wearables, Applied Machine Learning, Biofeedback